Abstract

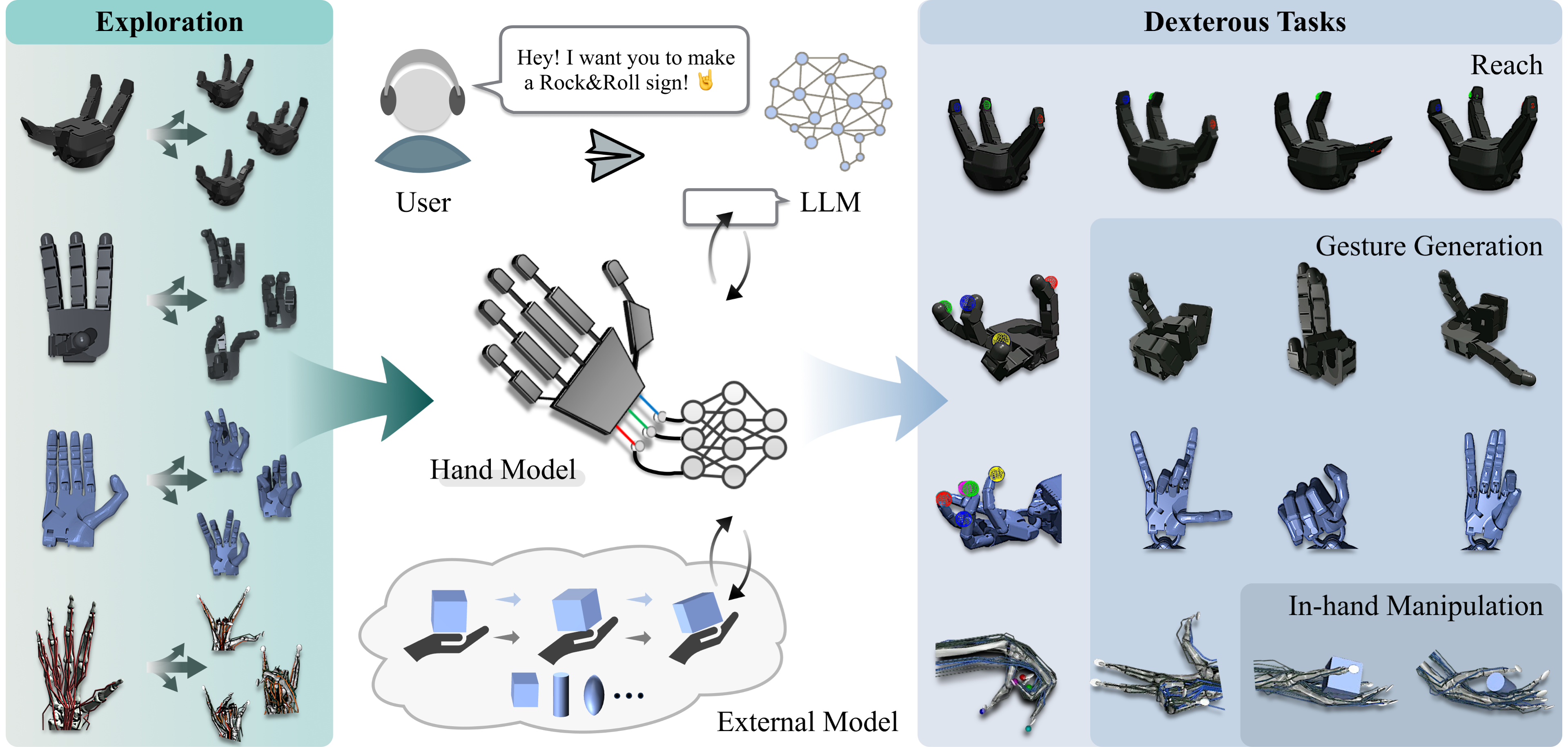

Controlling dexterous hands in a high-dimensional action space has been a longstanding challenge, yet humans perform dexterous tasks with ease. In this paper, we draw inspiration from the internal models exhibited in human behavior and reconsider dexterous hands as learnable systems. Specifically, we introduce MoDex, a framework that comprises a pair of neural networks (NNs) capturing the dynamical characteristics of the hand and a bidirectional planning approach, and that is efficient in both training and planning. To show the versatility of MoDex, we further integrate it with an external model to manipulate in-hand objects, and with a large language model (LLM) to generate various gestures, in both simulation and the real world. Extensive experiments on different dexterous hands examine the data efficiency of learning a new task and the transferability between tasks.

Method

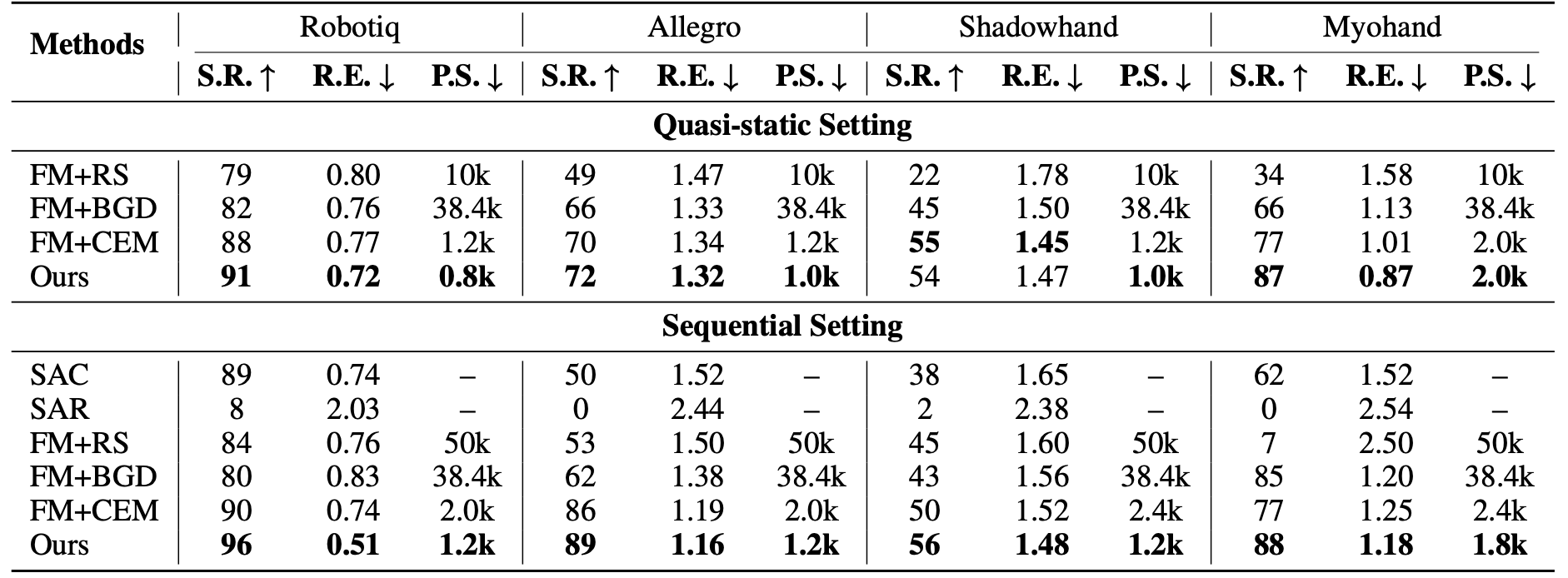

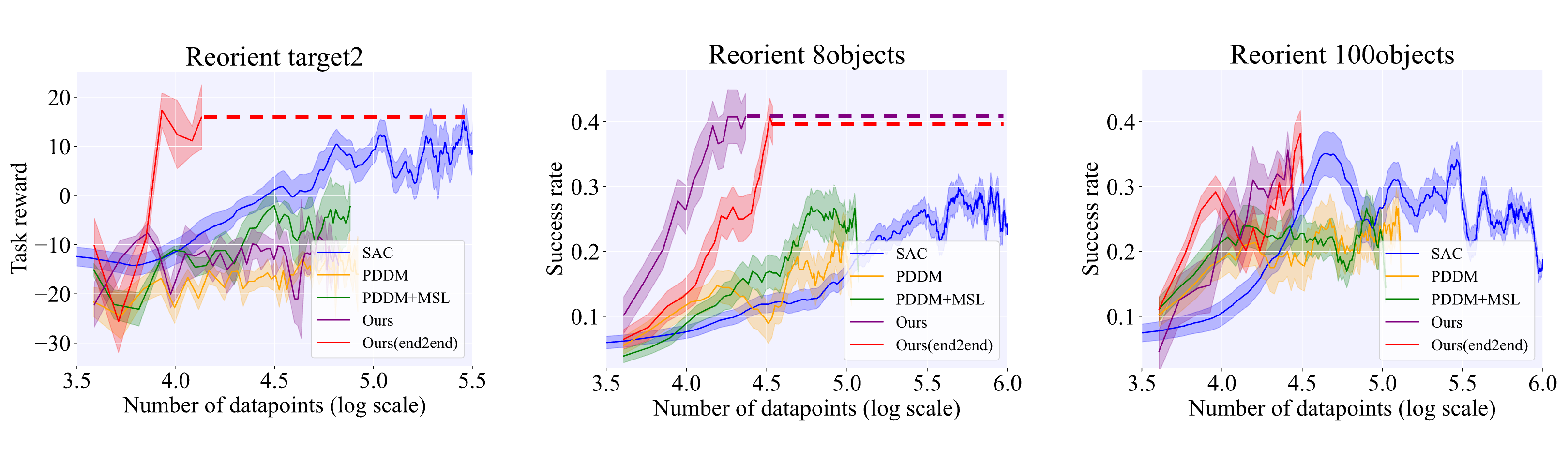

- We propose to model dexterous hands with neural networks (NNs). A hand model is composed of a forward model that captures the forward dynamics and an inverse model that provides action proposals. We further propose a bidirectional framework for efficient control, which integrates the hand model with cross-entropy method (CEM) planning.
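The bidirectional idea can be illustrated with a small sketch. It assumes one plausible reading of the text: the inverse model proposes actions that seed the CEM sampling distribution, while the forward model scores sampled action sequences by rolling them out. The `forward_model` and `inverse_model` below are hypothetical analytic stand-ins for the learned networks, and all names and hyperparameters (`ACTION_LIMIT`, `horizon`, `pop`, `n_elite`) are illustrative, not MoDex's implementation.

```python
import numpy as np

ACTION_LIMIT = 0.2  # hypothetical per-step joint displacement limit

def forward_model(state, action):
    """Toy stand-in for the learned forward model: predicts the next joint state."""
    return state + np.clip(action, -ACTION_LIMIT, ACTION_LIMIT)

def inverse_model(state, goal):
    """Toy stand-in for the learned inverse model: proposes an action toward the goal."""
    return np.clip(goal - state, -ACTION_LIMIT, ACTION_LIMIT)

def rollout_cost(state, actions, goal):
    """Roll an action sequence through the forward model; cost = final distance to goal."""
    for a in actions:
        state = forward_model(state, a)
    return float(np.linalg.norm(state - goal))

def cem_plan(state, goal, use_inverse=True, horizon=5, pop=64, n_elite=8,
             iters=5, seed=0):
    rng = np.random.default_rng(seed)
    dim = state.shape[0]
    mean = np.zeros((horizon, dim))
    if use_inverse:
        # Inverse direction: chain inverse-model proposals through the
        # forward model to build the initial mean action sequence.
        s = state.copy()
        for t in range(horizon):
            mean[t] = inverse_model(s, goal)
            s = forward_model(s, mean[t])
    std = np.full((horizon, dim), 0.1)
    best_seq, best_cost = mean.copy(), rollout_cost(state, mean, goal)
    for _ in range(iters):
        # Forward direction: sample around the mean and score with the forward model.
        samples = mean + std * rng.standard_normal((pop, horizon, dim))
        samples[0] = mean  # always re-evaluate the current mean
        costs = np.array([rollout_cost(state, seq, goal) for seq in samples])
        order = np.argsort(costs)
        if costs[order[0]] < best_cost:
            best_seq, best_cost = samples[order[0]].copy(), costs[order[0]]
        # Refit the sampling distribution to the elite sequences.
        elite = samples[order[:n_elite]]
        mean, std = elite.mean(axis=0), elite.std(axis=0) + 1e-3
    return best_seq, best_cost

start = np.zeros(4)
goal = np.array([0.5, -0.3, 0.8, 0.1])
plan_bi, cost_bi = cem_plan(start, goal, use_inverse=True)
_, cost_fwd = cem_plan(start, goal, use_inverse=False)
print(f"with inverse seed: {cost_bi:.2e}, forward-only: {cost_fwd:.2e}")
```

Seeding from the inverse model lets CEM start near a good solution instead of from zero-mean noise, which is one way the two directions can complement each other.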

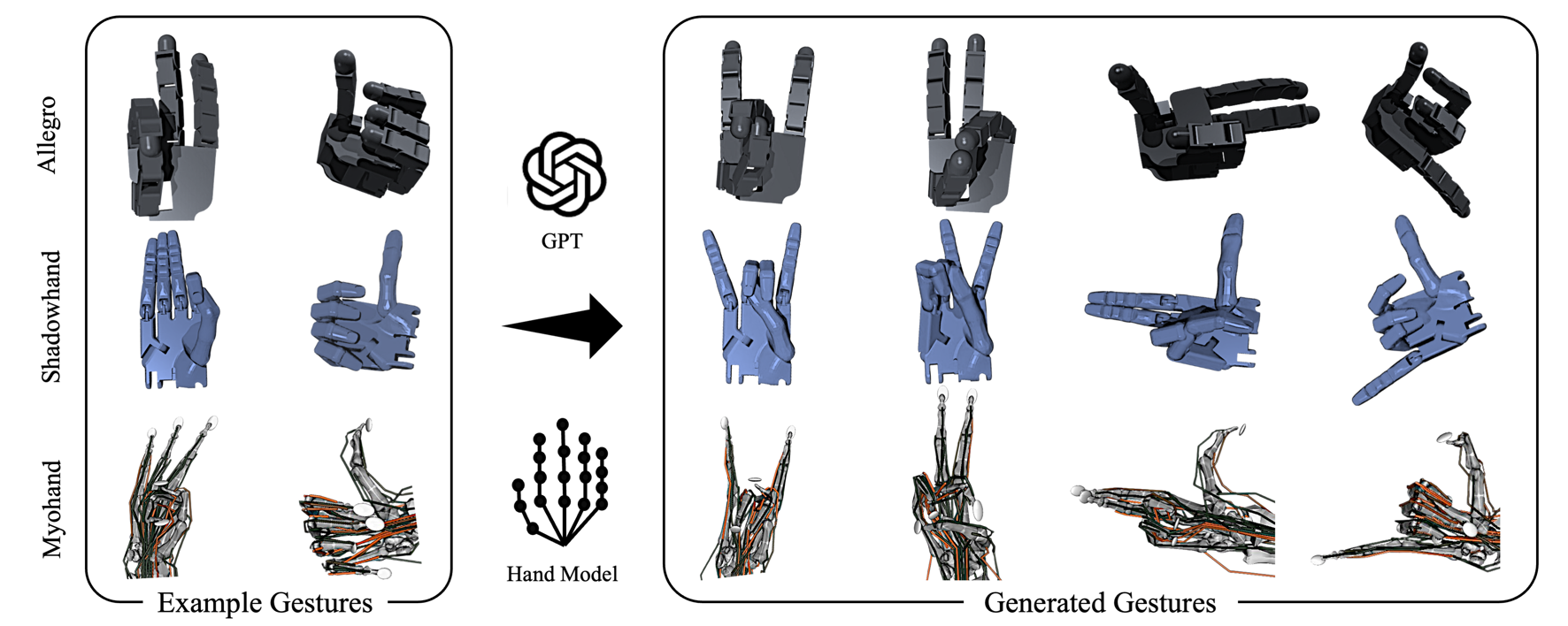

- We propose to combine a learned hand model with a large language model (LLM) to generate gestures. We link these two otherwise independent modules by prompting the LLM to produce cost functions for planning.

- We propose to achieve data-efficient in-hand manipulation by learning decomposed system dynamics models, in which the learned hand model is combined with an external model of the manipulated object.
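The LLM-to-planner link in the gesture-generation item can be sketched as follows, assuming the LLM is prompted to return a structured cost specification (target joint angles and weights in JSON) rather than free-form code. The joint names, the example reply, and the weighted quadratic cost are hypothetical; for brevity the hand model is omitted and CEM optimizes the joint pose directly instead of an action sequence.

```python
import json
import numpy as np

# Hypothetical joint layout of a toy 4-joint hand (not a real hand's API).
JOINTS = ["thumb_flex", "index_flex", "middle_flex", "ring_flex"]

# Hypothetical LLM reply to a prompt such as "make a thumbs-up gesture",
# assuming the prompt asked for target joint angles (rad) and weights in JSON.
llm_reply = """
{"targets": {"thumb_flex": 0.0, "index_flex": 1.4, "middle_flex": 1.4, "ring_flex": 1.4},
 "weights": {"thumb_flex": 2.0}}
"""

def cost_from_llm(reply):
    """Turn the JSON spec into a weighted quadratic cost over joint angles."""
    spec = json.loads(reply)
    target = np.array([spec["targets"][j] for j in JOINTS])
    # Joints without an explicit weight default to 1.0.
    weight = np.array([spec.get("weights", {}).get(j, 1.0) for j in JOINTS])

    def cost(q):
        return float(np.sum(weight * (q - target) ** 2))

    return cost

def cem_minimize(cost, dim, iters=30, pop=128, n_elite=16, seed=0):
    """Plain CEM over joint poses (the hand model is omitted for brevity)."""
    rng = np.random.default_rng(seed)
    mean, std = np.zeros(dim), np.ones(dim)
    for _ in range(iters):
        samples = mean + std * rng.standard_normal((pop, dim))
        costs = np.array([cost(s) for s in samples])
        elite = samples[np.argsort(costs)[:n_elite]]
        mean, std = elite.mean(axis=0), elite.std(axis=0) + 1e-4
    return mean

cost = cost_from_llm(llm_reply)
pose = cem_minimize(cost, len(JOINTS))
print(dict(zip(JOINTS, np.round(pose, 2))))
```

Asking for a structured specification instead of executable code keeps the planner's interface fixed and avoids running model-generated code.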
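A minimal sketch of the decomposed-dynamics idea, assuming the system splits into a hand model (predicting the next joint state from the current state and action) and an external object model (predicting the object's change from the hand's motion). Everything here is a hypothetical toy: `hand_model` is a fixed stand-in for a previously learned network, and the object model is linear so that a handful of transitions suffices to fit it with least squares.

```python
import numpy as np

N_JOINTS = 3
TRUE_GAIN = np.array([0.5, -0.2, 0.3])  # hypothetical: object rotation per unit joint motion

def true_step(q, o, a):
    """Ground-truth toy system, used only to generate data."""
    q_next = q + a
    o_next = o + TRUE_GAIN @ (q_next - q)
    return q_next, o_next

def hand_model(q, a):
    """Stand-in for a previously learned hand forward model, reused as-is."""
    return q + a

def fit_object_model(transitions):
    """Fit a linear object model (o' - o) = gain . (q' - q) by least squares."""
    X = np.array([q_next - q for q, _, _, q_next, _ in transitions])
    y = np.array([o_next - o for _, o, _, _, o_next in transitions])
    gain, *_ = np.linalg.lstsq(X, y, rcond=None)
    return gain

def composed_step(q, o, a, gain):
    """Chain the hand model and the object model into one system prediction."""
    q_next = hand_model(q, a)
    o_next = o + gain @ (q_next - q)
    return q_next, o_next

# Collect only five transitions for the new object; the hand model needs no new data.
rng = np.random.default_rng(0)
transitions = []
for _ in range(5):
    q, o, a = rng.normal(size=N_JOINTS), rng.normal(), rng.normal(size=N_JOINTS)
    q_next, o_next = true_step(q, o, a)
    transitions.append((q, o, a, q_next, o_next))

gain = fit_object_model(transitions)
q0, o0, a0 = np.zeros(N_JOINTS), 0.0, np.array([0.1, 0.2, -0.1])
pred = composed_step(q0, o0, a0, gain)
truth = true_step(q0, o0, a0)
print(np.round(gain, 3), pred[1], truth[1])
```

Because only the object model depends on the task in this toy setup, fitting it from a few transitions stays cheap; a real system would replace both linear pieces with learned neural networks.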